Featured Research

Tokenizers Are All We Need

A grounded walkthrough of how modern tokenizers work, why they matter, and how their hidden mechanics shape efficiency, language handling, and real-world model performance.

Why India Needs Its Own LLMs

The future of AI in India depends on building language models that understand our culture, context, and languages. This essay explores why we cannot rely solely on Western models and what makes Indian LLMs essential for our digital future. Language is not just communication: it carries centuries of cultural knowledge, regional nuance, and contextual understanding that translation or approximation cannot fully capture.

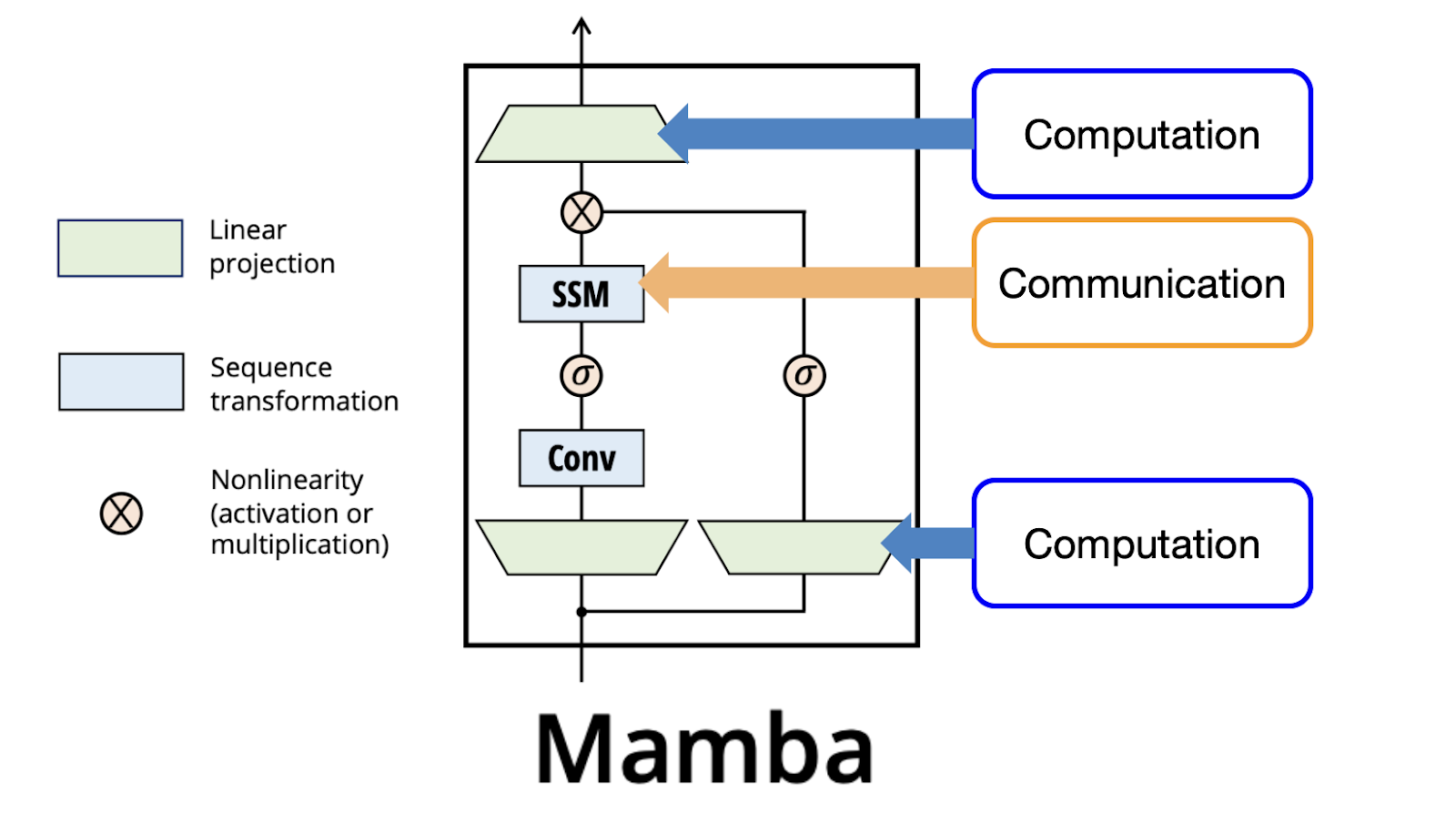

Diving Deep into the Mamba Model Implementation

A technical exploration of implementing the Mamba model in PyTorch, breaking down its architecture, its key components, and the challenges encountered along the way.

Contribute to Bhasa's Research

Join us in exploring the future of language and technology.

Contact Research Team